How Does Generative AI Work?

- Generative AI Works

- Jun 24

A Step-by-Step Guide

Generative Artificial Intelligence (Generative AI) is rapidly becoming one of the most impactful technologies of our time. It transforms how we interact with data, media, and machines – whether in the form of text, images, music, or code.

But how does generative AI actually work?

In this article, we break down generative AI into concrete steps – from data collection to generation – and explain not only the how, but also the why.

Whether you work in tech, design, decision-making, or are simply curious: this guide provides a detailed look into how generative AI functions.

What Exactly Is Generative AI?

Generative AI systems can create new content – such as text, images, audio, code, or video.

These systems actively generate results, as opposed to classic (discriminative) models that analyze and classify content.

Well-known systems include GPT (text), DALL·E and Midjourney (images), and MusicLM (audio). Deep neural networks form the core of these systems: typically transformers for text and, for images, often diffusion models. They learn to capture and reproduce complex patterns from massive volumes of data.

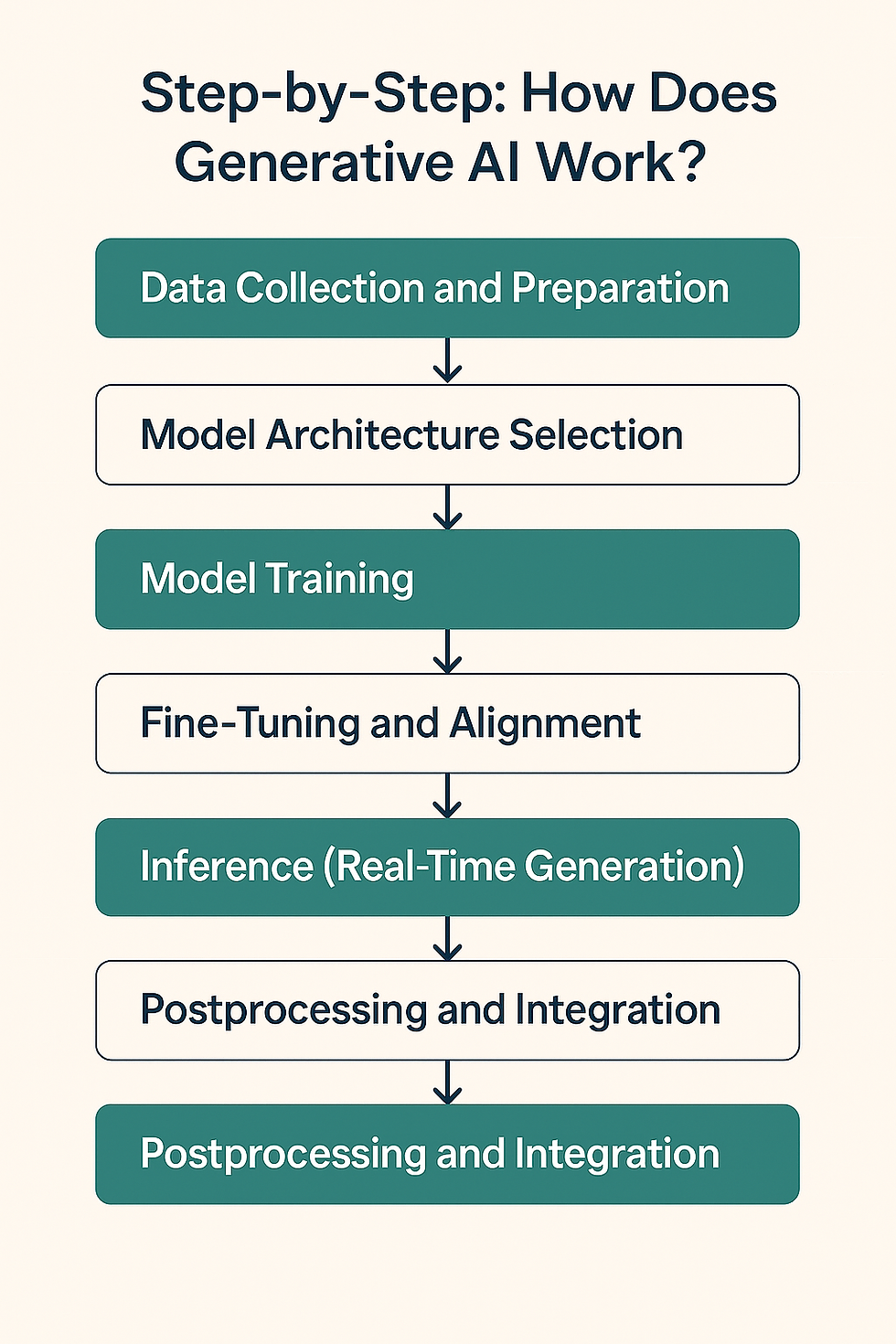

Step by Step: How Generative AI Works

Step 1: Data Collection and Preparation

The quality of generative AI depends on the data it is built upon. Everything starts with gathering large volumes of high-quality data – such as texts from the internet, public image databases, or audio archives.

Before training starts, raw data is:

Cleaned (errors, duplicates, irrelevant content)

Standardized (e.g. image sizes, text encoding)

Transformed into a model-understandable form (tokenization for text, vectorization for images)
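To make the three preparation steps concrete, here is a minimal sketch using only the Python standard library. It is a toy under stated assumptions: the function names (`clean`, `build_vocab`, `encode`) and the sample sentences are invented for illustration, and the word-level tokenizer stands in for the subword tokenizers that production systems typically use.

```python
import re
import unicodedata

TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")  # words, or single punctuation marks


def clean(texts):
    """Standardize each text, then drop empty entries and exact duplicates."""
    seen, kept = set(), []
    for text in texts:
        # Standardize: unify the Unicode form, collapse whitespace, lowercase.
        norm = unicodedata.normalize("NFKC", text)
        norm = re.sub(r"\s+", " ", norm).strip().lower()
        if norm and norm not in seen:
            seen.add(norm)
            kept.append(norm)
    return kept


def build_vocab(texts):
    """Map each distinct token to an integer id; id 0 is reserved for unknowns."""
    vocab = {"<unk>": 0}
    for text in texts:
        for token in TOKEN_PATTERN.findall(text):
            vocab.setdefault(token, len(vocab))
    return vocab


def encode(text, vocab):
    """Turn a string into a list of token ids the model can consume."""
    return [vocab.get(token, 0) for token in TOKEN_PATTERN.findall(text)]


raw = ["The cat sat.", "the  cat sat.", "", "A dog barked!"]
corpus = clean(raw)          # duplicate and empty entries are removed
vocab = build_vocab(corpus)
ids = encode("the dog sat?", vocab)
print(corpus)
print(ids)
```

The "?" in the last call was never seen during vocabulary building, so it maps to the reserved unknown id. Real pipelines handle this case with subword units rather than a single catch-all token.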

Step 2: Model Architecture Selection

At this stage, the neural network architecture is chosen. The transformer architecture, introduced in 2017, has proven powerful because it can capture contextual relationships across long sequences.

Examples:

GPT (Generative Pre-trained Transformer) for text

Stable Diffusion for images

Codex for code

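Transformer-based models such as GPT consist of many stacked layers that combine self-attention, feedforward networks, and positional encodings. Diffusion models such as Stable Diffusion are built differently, but they also use attention to link the text prompt to the image.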
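Self-attention is the piece that lets every position look at every other position. The following is a minimal single-head sketch in plain Python, without masking or learned weights. The function names and the tiny input vectors are assumptions made for illustration; in a real transformer the queries, keys, and values come from learned linear projections of the input.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention for one head, without masking."""
    d = len(keys[0])
    output = []
    for q in queries:
        # Similarity of this position's query to every position's key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        output.append(mixed)
    return output


# Three token vectors of dimension 2; here Q = K = V for simplicity.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = self_attention(x, x, x)
for row in out:
    print([round(value, 3) for value in row])
```

Each output row is a blend of all input vectors, weighted by how similar they are to the current position. That blending is what "capturing context" means mechanically.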

Step 3: Model Training

Training is the most compute-intensive part. The model learns statistical patterns in data through millions of iterations.

This involves:

Forward pass: Inputs generate outputs

Loss calculation: Deviation from target is measured

Backpropagation: Gradients of the loss are computed, and an optimizer adjusts the model weights to reduce the error
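The same three-part loop can be shown on a model small enough to read in full. The sketch below fits a straight line by gradient descent in plain Python. The data, learning rate, and epoch count are assumptions chosen for illustration; a generative model runs the same loop with billions of weights and gradients computed automatically by a deep learning framework.

```python
def train(data, lr=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    loss = None
    for step in range(epochs):
        # Forward pass: compute predictions with the current weights.
        preds = [w * x + b for x, _ in data]
        # Loss calculation: mean squared deviation from the targets.
        loss = sum((p - y) ** 2 for p, (_, y) in zip(preds, data)) / n
        # Backward pass: gradients of the loss with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, (x, y) in zip(preds, data)) / n
        grad_b = sum(2 * (p - y) for p, (_, y) in zip(preds, data)) / n
        # Update step: move the weights against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, loss


# Points that lie exactly on the line y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b, loss = train(data)
print(round(w, 3), round(b, 3))
```

After training, `w` and `b` land close to 2 and 1, the values that generated the data. Nothing told the model those numbers; it recovered them by repeatedly reducing the error.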

Training large models often takes weeks and requires massive computing power (GPUs, TPUs, clusters).

Step 4: Fine-Tuning and Alignment

After base training, the model is often fine-tuned for specific areas – such as legal texts, medical knowledge, or creative writing.

Alignment aims to make the model adhere to human values, ethical standards, or business goals. A common method is RLHF (Reinforcement Learning from Human Feedback), in which human ratings of model outputs guide further training.

Why this matters: Uncontrolled models may generate nonsense or risky content.

Step 5: Inference (Real-Time Generation)

This is the exciting part: the model is put to use. A user enters a prompt, and the model generates a result (text, image, or code).

Typical techniques in inference:

Sampling strategies like top-k, top-p, temperature

Prompt engineering for better outputs

Token-by-token generation with contextual control
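The sampling strategies named above can be combined in one small function. This is a sketch in plain Python, not the implementation of any particular library: `sample_next`, its parameters, and the example scores are assumptions for illustration. It expects a temperature greater than zero.

```python
import math
import random


def sample_next(logits, temperature=1.0, top_k=None, top_p=None, rng=random):
    """Pick a token id from raw scores using temperature, top-k and top-p."""
    # Temperature: values below 1 sharpen the distribution, above 1 flatten it.
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    # (probability, token id) pairs, most likely first.
    probs = sorted(((e / total, i) for i, e in enumerate(exps)), reverse=True)

    # Top-k: keep only the k most likely tokens.
    if top_k is not None:
        probs = probs[:top_k]

    # Top-p (nucleus): keep the smallest prefix whose mass reaches top_p.
    if top_p is not None:
        kept, mass = [], 0.0
        for p, i in probs:
            kept.append((p, i))
            mass += p
            if mass >= top_p:
                break
        probs = kept

    # Draw one token; random.choices renormalizes the remaining weights.
    weights = [p for p, _ in probs]
    ids = [i for _, i in probs]
    return rng.choices(ids, weights=weights, k=1)[0]


logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for a 4-token vocabulary
rng = random.Random(0)
greedy_like = sample_next(logits, top_k=1, rng=rng)
nucleus = [
    sample_next(logits, temperature=0.8, top_p=0.9, rng=rng) for _ in range(20)
]
print(greedy_like, sorted(set(nucleus)))
```

With `top_k=1` the function always returns the most likely token. With `top_p=0.9` at this temperature, the least likely token falls outside the nucleus and is never drawn. Token-by-token generation repeats this step, appending each chosen token to the context before scoring the next one.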

Step 6: Postprocessing and Integration

Before the result reaches the user, it is often processed again. This is especially important in enterprise contexts:

Content filtering (safety, data protection)

Tone, style, or format adjustments

Integration into software or workflows (e.g. via APIs)
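A postprocessing step can be as simple as a function that sits between the model and the user. The sketch below redacts e-mail addresses, flags blocked terms, and tidies the format. Everything in it is an assumption for illustration: the `postprocess` name, the simplified e-mail pattern, and the two-word blocklist. Production filters are usually far more thorough and often rely on dedicated classifiers.

```python
import re

# Simplified e-mail pattern; real-world address matching is more involved.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
BLOCKLIST = {"password", "secret"}  # hypothetical terms, for illustration only


def postprocess(text, max_chars=200):
    """Redact e-mail addresses, flag blocked terms, and enforce a length limit."""
    # Data protection: replace anything that looks like an e-mail address.
    redacted = EMAIL.sub("[redacted email]", text)
    # Safety: report which blocked terms appear in the output.
    words = set(re.findall(r"[a-z]+", redacted.lower()))
    flagged = sorted(words & BLOCKLIST)
    # Format: collapse whitespace and cut the text to the allowed length.
    cleaned = " ".join(redacted.split())[:max_chars]
    return {"text": cleaned, "flagged": flagged}


result = postprocess("Contact  jane.doe@example.com for the secret plan.")
print(result)
```

Returning the flags alongside the text, rather than silently dropping the output, leaves the decision to the calling application. That is one possible design; stricter settings might block the response outright.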

Limitations and Weaknesses of Generative Models

Despite their impressive capabilities, generative models are not perfect. To use them strategically, it's important to understand their limitations – technically, ethically, and conceptually.

Hallucinations and "Confidence Without Knowledge"

Generative models can create content that sounds plausible but is factually incorrect. This is called hallucination. It occurs because the model has no real-world understanding: it doesn't say "what's true", but "what's statistically likely".

The more confident a model sounds, the more dangerous this becomes – especially in sensitive domains like law, medicine, or science.

Biases and Ethical Pitfalls

Generative models are trained on large (often uncurated) datasets and thus inherit the biases embedded in them. These include:

Gender roles

Cultural and ethnic stereotypes

Linguistic dominance (e.g. English-centric views)

A model may discriminate unintentionally, even without being explicitly programmed to do so. These biases are difficult to detect and even harder to correct, as they're deeply rooted in the training data.

How Generative Models Differ Fundamentally from Classic AI

To truly understand generative AI, one must recognize that it operates in a completely different paradigm than traditional AI systems.

From Logic to Probability

Classic AI (e.g. rule-based systems or decision trees) is deterministic:

"If A, then B"

Clear, traceable rules

Generative models, by contrast, are statistical probability machines:

They calculate what's likely to come next

No fixed "knowledge", but dynamic language modeling

Meaning: they simulate understanding, without truly understanding.
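The contrast can be put side by side in a few lines of Python. The rule below is fixed and traceable, while the bigram model only counts which word followed which in its training text. Both the heating rule and the tiny corpus are invented for illustration, and a bigram counter is a drastic simplification of a modern language model, though the principle of predicting the next item from observed statistics is the same.

```python
from collections import Counter, defaultdict


def rule_based(temperature_c):
    """Classic rule: the same input always yields the same, traceable answer."""
    return "heating on" if temperature_c < 18 else "heating off"


def train_bigrams(text):
    """Count which word follows which in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Relative frequencies of the words seen after `word`."""
    following = counts[word]
    total = sum(following.values())
    return {w: c / total for w, c in following.items()}


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
probs = next_word_probs(model, "the")
print(rule_based(15))
print(probs)
```

The model rates "cat" as the most likely word after "the" purely because it appeared most often, not because it is correct in any given situation. This is also why plausible-sounding but wrong output is possible.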

No True Reasoning – Just Pattern Matching

Generative models:

Excel at identifying and imitating patterns

But they cannot truly reason, verify, or plan

They appear intelligent, but they are not agents with intention. This leads to misunderstandings in user expectations:

They write like humans

But they don’t think like humans

Emergence Instead of Programming

Many capabilities of large models (e.g. logical reasoning, coding, translation) were not explicitly programmed or trained for; they emerged as models and training data grew in scale.

These emergent properties are fascinating – but also difficult to control.

Conclusion

Generative AI is not a magical black box – it's a complex yet understandable process. From data curation to model training to user-centered generation, many layers interact to build systems that can write, design, compose – or even argue.

At Generative AI Works, we help organizations not only understand how generative AI works – but how to apply it strategically and meaningfully.

Whether you're building internal copilots, creative automation flows, or first steps toward intelligent agents – understanding the mechanics is the first step to successful adoption.

👉 Want to dive deeper? Get in touch – we look forward to connecting.
